Why SCOPE-RL?#

Motivation#

Sequential decision making is ubiquitous in many real-world applications, including healthcare, education, recommender systems, and robotics. While a logging or behavior policy interacts with users to optimize such sequential decision making, it also produces logged data valuable for learning and evaluating future policies. For example, a medical agency often records patients’ condition (state), the treatment chosen by the expert or behavior policy (action), the patients’ health index after the treatment such as vitals (reward), and the patients’ condition in the next time period (next state). Making the most of these logged data to evaluate a counterfactual policy is particularly beneficial in practice, as it can be a safe and cost-effective substitute for online A/B tests or clinical trials.

An example of sequential decision making in real-world applications

Off-Policy Evaluation (OPE), which studies how to accurately estimate the performance of an evaluation policy using only offline logged data, is thus gaining growing interest. Especially in the reinforcement learning (RL) setting, a variety of theoretically grounded OPE estimators have been proposed to accurately estimate the expected reward [4, 5, 6, 7, 11, 12, 13]. Moreover, several recent works on cumulative distribution OPE [8, 9, 10] aim to estimate the cumulative distribution function (CDF) and risk functions (e.g., variance, conditional value at risk (CVaR), and interquartile range) of an evaluation policy. These risk functions provide informative insights into policy performance, especially from a safety perspective, and are thus crucial for practical decision making.

Unfortunately, despite these recent advances in OPE of RL policies, only a few existing platforms [25, 26] are available for extensive OPE studies and benchmarking experiments. Moreover, those existing platforms lack the following important properties:

Most offline RL platforms:

… provide only a few basic OPE estimators.

Existing OPE platforms:

… have limited flexibility in the choices of environments and offline RL methods (i.e., limited compatibility with gym/gymnasium and offline RL libraries).

… support only the standard OPE framework and lack the implementation of cumulative distribution OPE.

… only focus on the accuracy of OPE/OPS and do not take the risk-return tradeoff of the policy selection into account.

… do not support user-friendly visualization tools to interpret the OPE results.

… do not provide well-described documentation.

It is critical to fill the above gaps to further facilitate OPE research and its practical applications. This is why we built SCOPE-RL, the first end-to-end platform for offline RL and OPE, which puts an emphasis on the OPE modules.

Key contributions#

The distinctive features of our SCOPE-RL platform are summarized as follows.

Below, we describe each advantage one by one. Note that for a quick comparison with the existing platforms, please refer to the following section.

End-to-end implementation of Offline RL and OPE#

While existing platforms support flexible implementations of either offline RL or OPE, we aim to bridge the offline RL and OPE processes and streamline an end-to-end procedure for the first time. Specifically, SCOPE-RL mainly consists of the following four modules, as shown in the figure below:

Workflow of offline RL and OPE streamlined by SCOPE-RL

Dataset module

Offline Learning (ORL) module

Off-Policy Evaluation (OPE) module

Off-Policy Selection (OPS) module

First, the Dataset module handles the data collection from RL environments. Since our Dataset module is compatible with OpenAI Gym or Gymnasium-like environments, SCOPE-RL is applicable to a variety of environmental settings. Moreover, SCOPE-RL supports compatibility with d3rlpy, which provides implementations of various online and offline RL algorithms. This also allows us to test the performance of offline RL and OPE with various behavior policies or other experimental settings.

Next, the ORL module provides an easy-to-handle wrapper for learning new policies with various offline RL algorithms. While d3rlpy already provides user-friendly APIs, its implementation is basically intended for using offline RL algorithms one at a time. Therefore, to connect the end-to-end offline RL and OPE processes more smoothly, the implemented ORL wrapper enables us to handle multiple datasets and multiple algorithms in a single class.

Finally, the OPE and OPS modules are our particular focus. As we will review in the following sub-sections, we implement a variety of OPE estimators, ranging from basic choices [4, 5, 6, 7] and advanced ones [12, 13, 14, 15, 16] to estimators for the cutting-edge cumulative distribution OPE [9, 10]. Moreover, we provide a meta-class to handle OPE/OPS experiments and an abstract base implementation of OPE estimators. This allows researchers to quickly test their own algorithms with this platform and also helps practitioners empirically learn the properties of various OPE methods.

Four key features of OPE/OPS modules of SCOPE-RL

Variety of OPE estimators and evaluation protocol of OPE#

SCOPE-RL provides implementations of various OPE estimators in both discrete and continuous action settings. For standard OPE, which aims to estimate the expected performance of a given evaluation policy, we implement the OPE estimators listed below. These implementations are as comprehensive as those of the existing OPE platforms, including [25, 26].

Example of estimating policy value using various OPE estimators

See also

Detailed descriptions of each estimator and evaluation metric are in Supported Implementation (OPE/OPS).

Basic estimators

(abstract base)

Trajectory-wise Importance Sampling (TIS) [5]

Per-Decision Importance Sampling (PDIS) [5]

Self-Normalized Trajectory-wise Importance Sampling (SNTIS) [5, 11]

Self-Normalized Per-Decision Importance Sampling (SNPDIS) [5, 11]

State Marginal Estimators

(abstract base)

State Marginal Direct Method (SM-DM) [12]

State Marginal Self-Normalized Importance Sampling (SM-SNIS) [12, 13]

State Marginal Self-Normalized Doubly Robust (SM-SNDR) [12, 13]

Spectrum of Off-Policy Evaluation (SOPE) [14]

State-Action Marginal Estimators

(abstract base)

State-Action Marginal Importance Sampling (SAM-IS) [12]

State-Action Marginal Doubly Robust (SAM-DR) [12]

State-Action Marginal Self-Normalized Importance Sampling (SAM-SNIS) [12]

State-Action Marginal Self-Normalized Doubly Robust (SAM-SNDR) [12]

Spectrum of Off-Policy Evaluation (SOPE) [14]

Double Reinforcement Learning

Double Reinforcement Learning [15]

Weight and Value Learning Methods

Minimax Q-Learning and Weight Learning (MQL/MWL) [12]

High Confidence OPE
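To make the basic importance sampling estimators listed above more concrete, the following is a minimal, standard-library-only sketch of Trajectory-wise Importance Sampling (TIS), Per-Decision Importance Sampling (PDIS), and their self-normalized variants. It is not SCOPE-RL's API: the function names `tis` and `pdis`, the `(behavior_prob, evaluation_prob, reward)` step format, and the fixed-horizon assumption are all hypothetical choices made for illustration.

```python
from math import prod


def discounted_return(traj, gamma):
    """Discounted sum of rewards of one trajectory."""
    return sum(gamma ** t * r for t, (_, _, r) in enumerate(traj))


def tis(trajectories, gamma=1.0, self_normalized=False):
    """TIS: weight each trajectory's return by the product of all step-wise ratios."""
    weights = [prod(pi_e / pi_b for pi_b, pi_e, _ in traj) for traj in trajectories]
    returns = [discounted_return(traj, gamma) for traj in trajectories]
    # Self-normalization divides by the sum of weights instead of the sample size.
    denom = sum(weights) if self_normalized else len(trajectories)
    return sum(w * g for w, g in zip(weights, returns)) / denom


def pdis(trajectories, gamma=1.0, self_normalized=False):
    """PDIS: weight the reward at step t by the ratio product up to step t only."""
    horizon = len(trajectories[0])  # assumption: all trajectories share one length
    running = [1.0] * len(trajectories)  # cumulative importance weight per trajectory
    estimate = 0.0
    for t in range(horizon):
        step = [traj[t] for traj in trajectories]
        running = [w * pi_e / pi_b for w, (pi_b, pi_e, _) in zip(running, step)]
        denom = sum(running) if self_normalized else len(trajectories)
        weighted_reward = sum(w * r for w, (_, _, r) in zip(running, step))
        estimate += gamma ** t * weighted_reward / denom
    return estimate


# Toy logged data: each step is (behavior_prob, evaluation_prob, reward).
logged = [
    [(0.5, 0.5, 1.0), (0.5, 0.5, 0.0)],
    [(0.5, 0.5, 0.0), (0.5, 0.5, 2.0)],
]
print(tis(logged, gamma=0.9), pdis(logged, gamma=0.9))
```

When the evaluation and behavior policies coincide, as in the toy data, all weights equal one and both estimators reduce to the average discounted return. The two differ once the policies diverge, because PDIS does not let ratios from later steps affect earlier rewards.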

Moreover, we streamline the evaluation protocol of OPE/OPS with the following metrics.

OPE metrics

Type I and Type II Error Rates

OPS metrics (performance of top \(k\) deployment policies)

{Best/Worst/Mean/Std} of policy performance

Safety violation rate

Sharpe ratio (our proposal)

Note that, among the above top-\(k\) metrics, SharpeRatio is the proposal of our research paper “Towards Assessing and Benchmarking Risk-Return Tradeoff of Off-Policy Evaluation”. The page Risk-Return Assessments of OPE via SharpeRatio@k describes the above metrics and the contribution of SharpeRatio@k in detail. We also discuss these metrics briefly in a later sub-section.

Cumulative Distribution OPE for risk function estimation#

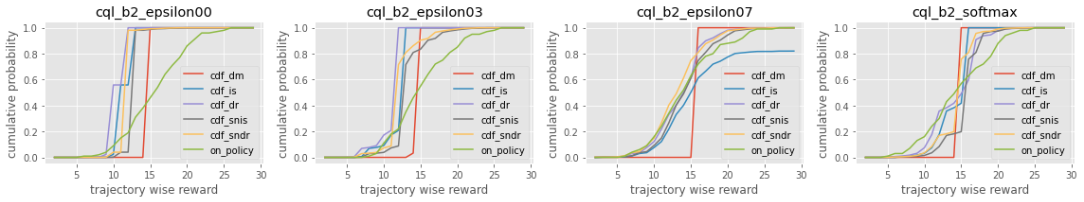

Besides the standard OPE, SCOPE-RL differentiates itself from other OPE platforms by supporting cumulative distribution OPE for the first time. Roughly, cumulative distribution OPE aims to estimate the whole distribution of the policy performance, not just its expected value as the standard OPE does.

Example of estimating the cumulative distribution function (CDF) via OPE

By estimating the cumulative distribution function (CDF), we can derive the following statistics of the policy performance:

Mean (i.e., policy value)

Variance

Conditional Value at Risk (CVaR)

Interquartile Range
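As a rough illustration of how the statistics listed above follow from an estimated CDF, the sketch below builds a self-normalized, importance-weighted empirical CDF over trajectory returns and reads each statistic off it. This is an assumption-laden toy, not SCOPE-RL's implementation: the function names, the use of trajectory-wise weights, and the quantile convention (smallest return whose CDF value reaches the level) are all hypothetical.

```python
def estimate_cdf(returns, weights):
    """Self-normalized weighted empirical CDF as sorted (return, cumulative prob) pairs."""
    total = sum(weights)
    cdf, cumulative = [], 0.0
    for g, w in sorted(zip(returns, weights)):
        cumulative += w / total
        cdf.append((g, cumulative))
    return cdf


def probability_masses(cdf):
    """Recover the probability mass placed on each return from the CDF."""
    masses, previous = [], 0.0
    for g, c in cdf:
        masses.append((g, c - previous))
        previous = c
    return masses


def cdf_mean(cdf):
    return sum(g * p for g, p in probability_masses(cdf))


def cdf_variance(cdf):
    mu = cdf_mean(cdf)
    return sum(p * (g - mu) ** 2 for g, p in probability_masses(cdf))


def cdf_quantile(cdf, level):
    """Smallest return whose CDF value reaches `level` (small tolerance for rounding)."""
    for g, c in cdf:
        if c >= level - 1e-12:
            return g
    return cdf[-1][0]


def cdf_cvar(cdf, alpha):
    """Average return over the worst `alpha` fraction of the probability mass."""
    remaining, total = alpha, 0.0
    for g, p in probability_masses(cdf):
        take = min(p, remaining)
        total += take * g
        remaining -= take
        if remaining <= 1e-12:
            break
    return total / alpha


def cdf_interquartile_range(cdf):
    return cdf_quantile(cdf, 0.75) - cdf_quantile(cdf, 0.25)


# Toy example: four trajectory returns with equal importance weights.
cdf = estimate_cdf(returns=[3.0, 0.0, 2.0, 1.0], weights=[1.0, 1.0, 1.0, 1.0])
print(cdf_mean(cdf), cdf_variance(cdf), cdf_cvar(cdf, 0.5), cdf_interquartile_range(cdf))
```

With equal weights the toy CDF places mass 0.25 on each return, so the mean is 1.5 and the CVaR at level 0.5 averages the two lowest returns.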

Knowing the whole performance distribution or deriving risk metrics such as CVaR is particularly beneficial in real-life situations where safety matters. For example, in recommender systems, we are interested in stably providing good-quality products rather than occasionally providing an extremely good one while at other times seriously hurting user satisfaction with bad items. Moreover, in self-driving cars, catastrophic accidents should be avoided even if the probability is small (e.g., less than 10%). We believe that the release of cumulative distribution OPE implementations will boost the applicability of OPE in practical situations.

Risk-Return Assessments of OPS#

Our SCOPE-RL is also unique in that it enables risk-return assessments of Off-Policy Selection (OPS).

While OPE is useful for estimating the performance of a new policy using offline logged data, OPE sometimes produces erroneous estimates due to counterfactual estimation and distribution shift between the behavior and evaluation policies. Therefore, in practical situations, we cannot rely solely on OPE results to choose the production policy, and instead combine OPE results and online A/B tests for policy evaluation and selection [27]. Specifically, the practical workflow often begins by filtering out poor-performing policies based on OPE results, then conducting A/B tests on the remaining top-\(k\) policies to identify the best policy based on the more reliable online evaluation, as illustrated in the following figure.

Practical workflow of policy evaluation and selection

While the conventional metrics of OPE focus on the “accuracy” of OPE and OPS measured by mean-squared error (MSE) [25, 28], rank correlation [24, 26], and regret [29, 30], we measure risk, return, and efficiency of the selected top-\(k\) policy with the following metrics.

Example of evaluating OPE/OPS methods with top-\(k\) risk-return tradeoff metrics

best @ \(k\) (return)

worst @ \(k\), mean @ \(k\), std @ \(k\) (risk)

safety violation rate @ \(k\) (risk)

Sharpe ratio @ \(k\) (efficiency, our proposal)
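The top-\(k\) metrics above can be sketched in a few lines. The code below is a hedged illustration rather than SCOPE-RL's API: the function name `top_k_metrics` and its arguments are hypothetical, the standard deviation is assumed to be the population one over the top-\(k\) policies, the safety violation rate is assumed to be the fraction of selected policies whose true value falls below a given threshold, and SharpeRatio@k is assumed to be (best@k minus the behavior policy value) divided by std@k, following our reading of the referenced paper.

```python
from statistics import mean, pstdev


def top_k_metrics(estimated_values, true_values, k, behavior_value, safety_threshold):
    """Evaluate the top-k policies chosen by an OPE estimator against their true values."""
    # Rank candidate policies by their OPE estimate, highest first.
    ranking = sorted(
        range(len(estimated_values)),
        key=lambda i: estimated_values[i],
        reverse=True,
    )
    selected = [true_values[i] for i in ranking[:k]]

    best, worst = max(selected), min(selected)
    std = pstdev(selected)  # assumption: population std over the k selected policies
    violations = sum(value < safety_threshold for value in selected)
    return {
        "best": best,
        "worst": worst,
        "mean": mean(selected),
        "std": std,
        "safety_violation_rate": violations / k,
        # Return gained over the behavior policy per unit of risk taken during A/B tests.
        "sharpe_ratio": (best - behavior_value) / std if std > 0 else float("nan"),
    }


# Toy example: four candidate policies, two deployed in the online A/B test.
metrics = top_k_metrics(
    estimated_values=[1.0, 3.0, 2.0, 0.5],
    true_values=[1.2, 2.0, 4.0, 0.1],
    k=2,
    behavior_value=1.0,
    safety_threshold=2.5,
)
print(metrics)
```

In this toy case the estimator ranks the second and third policies highest, so the metrics are computed over their true values 2.0 and 4.0 only; policies filtered out by OPE never contribute risk.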

See also

Among the top-\(k\) risk-return tradeoff metrics, SharpeRatio is the main proposal of our research paper “Towards Assessing and Benchmarking Risk-Return Tradeoff of Off-Policy Evaluation”. We describe the motivation and contributions of the SharpeRatio metric in Risk-Return Assessments of OPE via SharpeRatio@k.

Comparisons with the existing platforms#

Finally, we provide a comprehensive comparison with the existing offline RL and OPE platforms.

Comparing SCOPE-RL with existing offline RL and OPE platforms

The criteria for each column are as follows:

“data collection”: ✅ means that the platform is compatible with Gymnasium environments [31] and thus is able to handle various settings.

“offline RL”: ✅ means that the platform implements a variety of offline RL algorithms or is compatible with one of the offline RL libraries. In particular, our SCOPE-RL is compatible with d3rlpy [32].

“OPE”: ✅ means that the platform implements various OPE estimators other than the standard choices including Direct Method [4], Importance Sampling [5], and Doubly Robust [6]. (limited) means that the platform supports only these standard estimators.

“CD-OPE”: the abbreviation of Cumulative Distribution OPE, which estimates the cumulative distribution function of the return under the evaluation policy [9, 10].

In summary, our unique contributions are (1) to provide the first end-to-end platform for offline RL, OPE, and OPS, (2) to support cumulative distribution OPE for the first time, and (3) to implement (the proposed) SharpeRatio@k and other top-\(k\) risk-return tradeoff metrics for the risk assessments of OPS. Additionally, we provide user-friendly visualization tools, documentation, and quickstart examples to facilitate quick benchmarking and practical application. We hope that SCOPE-RL will serve as an important milestone for the future development of OPE research.

Note that the compared platforms include the following:

(offline RL platforms)

(application-specific testbeds)

(OPE platforms)

Remark

Our implementations are highly inspired by OpenBanditPipeline (OBP) [40], which has demonstrated success in enabling flexible OPE experiments in contextual bandits. We hope that SCOPE-RL will also serve as a quick prototyping and benchmarking toolkit for OPE of RL policies, as done by OBP in non-RL settings.